Winning the 1X World Model Challenge

26 Nov, 2025

Aidan Scannell

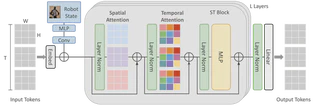

Architecture: Spatio-temporal Transformer World model

Abstract

World models are a promising approach for training reinforcement learning agents safely and sample-efficiently, and they may also form the basis for many other kinds of game interaction. We present two pieces of work in this space.

First, Amos Storkey will present DIAMOND (DIffusion As a Model Of eNvironment Dreams), a reinforcement learning agent trained in a diffusion world model. We analyze the key design choices required to make diffusion suitable for world modeling and show how improved visual detail can lead to improved agent performance on the Atari 100k benchmark. We also demonstrate that DIAMOND’s diffusion world model, trained on static Counter-Strike: Global Offensive gameplay, can stand alone as an interactive neural game engine.

Second, Aidan Scannell will introduce the more advanced designs that enabled us to win the 1X World Model Challenge. In particular, we explain how we adapted the video generation foundation model Wan-2.2 TI2V-5B for video- and state-conditioned future frame prediction in the 1X sampling track, and how we trained a Spatio-Temporal Transformer from scratch for the 1X compression track. These approaches took 1st place in both tracks.
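The abstract names a Spatio-Temporal Transformer for the compression track but does not describe its internals. One common way to build such a model is to factorize attention: tokens first attend within their own frame (spatial attention), then each spatial position attends across frames under a causal mask (temporal attention). The NumPy block below is a minimal single-head sketch of that general technique under our own assumptions, not the authors' implementation. The names `st_block`, `attention`, `init_params` and the weight keys are hypothetical, and the sketch omits layer normalization, multi-head attention, positional embeddings, the tokenizer, and any action or state conditioning.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max for numerical stability; masked (-inf) entries become 0.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(q, k, v, mask=None):
    # Scaled dot-product attention over the second-to-last axis.
    # q, k, v: (batch, n, d); mask: boolean (n, n), True = may attend.
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])
    if mask is not None:
        scores = np.where(mask, scores, -np.inf)
    return softmax(scores) @ v


def init_params(d, hidden, seed=0):
    # Hypothetical single-head weights for one block (illustration only).
    rng = np.random.default_rng(seed)
    params = {name: rng.normal(0.0, d ** -0.5, (d, d))
              for name in ["Wq_s", "Wk_s", "Wv_s", "Wq_t", "Wk_t", "Wv_t"]}
    params["W1"] = rng.normal(0.0, d ** -0.5, (d, hidden))
    params["W2"] = rng.normal(0.0, hidden ** -0.5, (hidden, d))
    return params


def st_block(x, p):
    # x: (T, S, D) = frames x spatial tokens per frame x embedding dim.
    T = x.shape[0]
    # 1) Spatial attention: tokens attend only within their own frame (batched over T).
    x = x + attention(x @ p["Wq_s"], x @ p["Wk_s"], x @ p["Wv_s"])
    # 2) Temporal attention: each spatial position attends over time, causally,
    #    so frame t never sees frames after t.
    xt = np.swapaxes(x, 0, 1)  # (S, T, D)
    causal = np.tril(np.ones((T, T), dtype=bool))
    xt = xt + attention(xt @ p["Wq_t"], xt @ p["Wk_t"], xt @ p["Wv_t"], mask=causal)
    x = np.swapaxes(xt, 0, 1)  # back to (T, S, D)
    # 3) Position-wise ReLU MLP with a residual connection.
    return x + np.maximum(0.0, x @ p["W1"]) @ p["W2"]


if __name__ == "__main__":
    T, S, D = 4, 16, 32  # e.g. 4 frames of a 4x4 token grid, 32-dim embeddings
    x = np.random.default_rng(1).normal(size=(T, S, D))
    y = st_block(x, init_params(D, hidden=4 * D))
    print(y.shape)  # (4, 16, 32)
```

Factorizing attention this way costs roughly O(T·S² + S·T²) per block rather than O((T·S)²) for full attention over every token in the clip, and the causal temporal mask lets the same block be used to predict the next frame from past frames only.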

Event

Huawei AI Application Workshop

Location

Dublin, Ireland