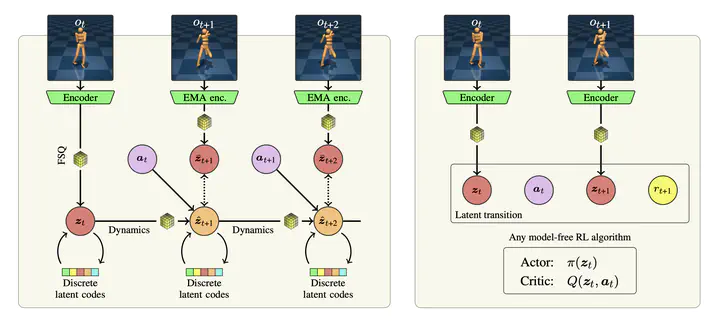

Representation learning for reinforcement learning (RL) has shown much promise for continuous control. In this project, we investigate whether vector quantization can prevent representation collapse when representations for RL are learned with a self-supervised latent-state consistency loss.
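To make the two ingredients concrete, here is a minimal NumPy sketch, not the project's actual implementation. It assumes a nearest-neighbour codebook lookup for vector quantization and a mean-squared-error form of the latent-state consistency loss; the names `quantize`, `consistency_loss`, `codebook`, and `latents` are hypothetical and chosen only for illustration.

```python
import numpy as np


def quantize(latents, codebook):
    """Map each latent vector to its nearest codebook entry (Euclidean distance).

    Assumption: plain nearest-neighbour lookup; the project may quantize differently.
    """
    # Pairwise squared distances, shape (batch, num_codes).
    diffs = latents[:, None, :] - codebook[None, :, :]
    sq_dists = np.sum(diffs ** 2, axis=-1)
    indices = np.argmin(sq_dists, axis=1)
    return codebook[indices], indices


def consistency_loss(predicted_next, target_next):
    """Mean squared error between predicted and target next-state latents.

    Assumption: MSE stands in for whatever consistency loss the project uses.
    """
    return float(np.mean((predicted_next - target_next) ** 2))


# Hypothetical toy data: three codebook entries and three 2-D latents.
codebook = np.array([[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0]])
latents = np.array([[0.9, 1.2], [-0.8, 0.7], [0.1, -0.2]])
quantized, indices = quantize(latents, codebook)

# A collapsed encoder that emits the same vector for every state makes the
# consistency loss trivially zero, which is the failure mode of interest.
collapsed = np.zeros((3, 2))
collapsed_loss = consistency_loss(collapsed, collapsed)
```

The last two lines show why collapse is a concern for this kind of objective: a constant representation satisfies the consistency loss perfectly while carrying no information about the state.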