This work derives a novel variational lower bound, based on sparse GPs, for the mixture of Gaussian process experts model with a GP-based gating network.

The model and its inference are implemented as a package (mogpe) written in GPflow/TensorFlow.

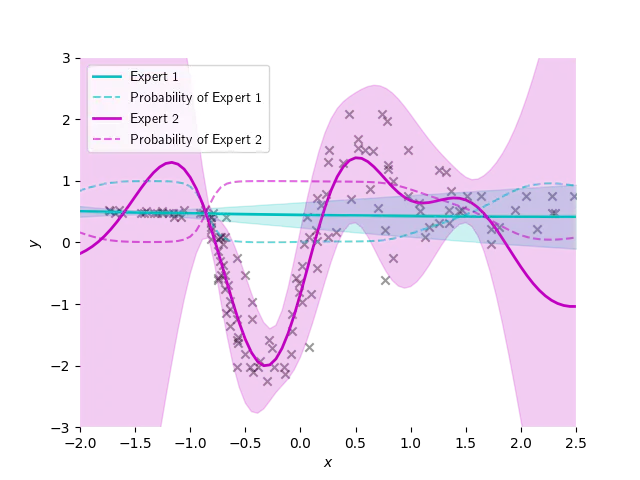

Mixture models are inherently unidentifiable, as different combinations of component distributions and mixture weights can generate the same distribution over the observations. This work proposes a scalable mixture of experts model in which both the experts and the gating functions are modelled using Gaussian processes. Importantly, this balanced treatment of the experts and the gating network introduces an interplay between the different parts of the model, which can be used to constrain the set of admissible functions and so reduce the identifiability issues normally associated with mixture models. The work also derives a variational lower bound that allows for stochastic updates, enabling the model to be used in a scalable fashion.
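The identifiability issue mentioned above can be illustrated with a minimal NumPy sketch. It is not taken from the mogpe package: the helper names (`gaussian_pdf`, `mixture_density`) and all parameter values are hypothetical, and it uses a plain two-component Gaussian mixture with constant weights rather than GP experts or a GP gating network. It shows only the simplest case, where relabelling the components leaves the density over the observations unchanged.

```python
import numpy as np


def gaussian_pdf(y, mean, std):
    """Density of a univariate Gaussian N(mean, std^2) evaluated at y."""
    return np.exp(-0.5 * ((y - mean) / std) ** 2) / (std * np.sqrt(2.0 * np.pi))


def mixture_density(y, weights, means, stds):
    """Density of a Gaussian mixture: sum_k weights[k] * N(y | means[k], stds[k]^2)."""
    y = np.asarray(y, dtype=float)[:, None]  # shape (N, 1), broadcasts against (K,)
    return np.sum(weights * gaussian_pdf(y, means, stds), axis=1)


# Hypothetical parameters, chosen only for illustration.
y_grid = np.linspace(-5.0, 5.0, 201)
weights = np.array([0.3, 0.7])
means = np.array([-1.0, 2.0])
stds = np.array([0.5, 1.5])

# Swap the component labels: a different parameter setting, same density.
perm = np.array([1, 0])
density_a = mixture_density(y_grid, weights, means, stds)
density_b = mixture_density(y_grid, weights[perm], means[perm], stds[perm])

same_density = bool(np.allclose(density_a, density_b))
print("Relabelled mixture gives the same density:", same_density)
```

Because the two parameter settings cannot be told apart from the observations alone, extra structure is needed to prefer one over the other, for example the constraints on the experts and gating functions described above.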