In this work I reimplemented the PILCO algorithm (originally written in MATLAB) in Python using TensorFlow and GPflow. I carried out this work mainly for personal development, and parts of it are based on an existing Python implementation. This repository will mainly serve as a baseline for my future research.

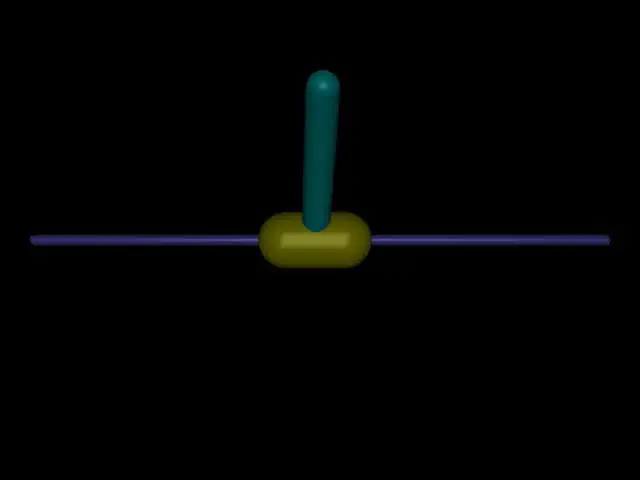

I implemented the cart pole benchmark using MuJoCo and OpenAI Gym. I did this for two reasons: Gym’s CartPole environment does not have a continuous action space, and the InvertedPendulum-v2 environment uses an “inverted” cart pole. The new environment represents the traditional cart pole benchmark with a continuous action space.
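To make the difference between the two action spaces concrete, here is a minimal, dependency-free sketch. It is not the code in this repository and does not use MuJoCo: the dynamics are the classic textbook cart pole equations integrated with an explicit Euler step, and every constant and function name (`MAX_FORCE`, `discrete_force`, `continuous_force`, `step`) is a hypothetical choice made for illustration. The pole angle is assumed to be measured from the upright position.

```python
import math

# All constants are illustrative assumptions, not values taken from this repository.
GRAVITY = 9.8            # m/s^2
MASS_CART = 1.0          # kg
MASS_POLE = 0.1          # kg
HALF_POLE_LENGTH = 0.5   # m, distance from the pivot to the pole's centre of mass
MAX_FORCE = 10.0         # N
DT = 0.02                # s, integration step


def discrete_force(action):
    """Discrete interface: action 1 pushes right, anything else pushes left."""
    return MAX_FORCE if action == 1 else -MAX_FORCE


def continuous_force(action):
    """Continuous interface: clip the action to [-1, 1] and scale it to a force."""
    clipped = max(-1.0, min(1.0, action))
    return clipped * MAX_FORCE


def step(state, force):
    """Advance (x, x_dot, theta, theta_dot) by one explicit Euler step."""
    x, x_dot, theta, theta_dot = state
    total_mass = MASS_CART + MASS_POLE
    pole_mass_length = MASS_POLE * HALF_POLE_LENGTH
    sin_t, cos_t = math.sin(theta), math.cos(theta)

    # Classic cart pole equations of motion (theta = 0 is upright).
    temp = (force + pole_mass_length * theta_dot ** 2 * sin_t) / total_mass
    theta_acc = (GRAVITY * sin_t - cos_t * temp) / (
        HALF_POLE_LENGTH * (4.0 / 3.0 - MASS_POLE * cos_t ** 2 / total_mass)
    )
    x_acc = temp - pole_mass_length * theta_acc * cos_t / total_mass

    return (
        x + DT * x_dot,
        x_dot + DT * x_acc,
        theta + DT * theta_dot,
        theta_dot + DT * theta_acc,
    )
```

With the discrete interface a controller can only choose between two full-strength pushes, whereas the continuous interface lets it apply any force in the allowed range, for example `step(state, continuous_force(0.25))`. A policy that outputs real-valued controls, as PILCO's does, needs the latter.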

The `env/cart_pole_env.py` file contains the new `CartPole` class, based on InvertedPendulum-v2. I also created the `env/cart_pole.xml` file, which defines the MuJoCo model for the traditional cart pole.